LEARNER (Learning Environments with Advanced Robotics for Next-generation Emergency Responders)

Sponsor: NSF – Convergence Accelerator

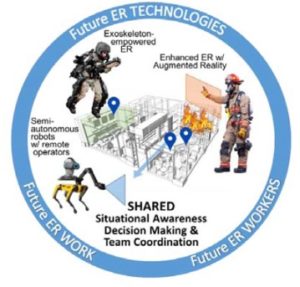

Human augmentation technologies such as robotics and augmented reality have the potential to dramatically transform the landscape of emergency response (ER) work. Specifically, collaborative semi-autonomous ground robots can potentially aid surveillance as well as search and rescue operations; powered exoskeletons can augment human physical capacity while still preserving human autonomy; and augmented reality can enhance ER worker cognition, both as a tool in itself to support wayfinding, situational collaboration, and decision making, and as a means to operate robots and exoskeletons. The core of this work examines context-sensitive and use-inspired combinations of these augmentation technologies in emergency response to benefit both ER work and workers.

Collaborators: TAMU (Moats, Murphy), Virginia Tech (Gabbard, Srinivasan, Leonessa), University of Florida (Du)

AMELIA (AugMEnted Learning InnovAtion)

Sponsor: NSF – Cyber Human Systems

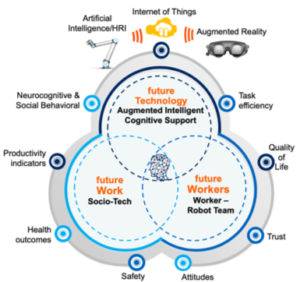

Augmenting Human Cognition with Collaborative Robots. This research will contribute new knowledge and theory of Human-Computer Interaction and Human-Robot Interaction (HRI) by augmenting human cognition for safer and more efficient collaborative robot interaction. The team plans to: (1) develop a novel HRI task/scenario classification scheme in collaborative robotics environments vulnerable to observable system failures; (2) establish fundamental neurophysiological, cognitive, and socio-behavioral capability models (e.g., workload, cognitive load, fatigue/stress, affect, and trust) during these interactions (i.e., the mind-motor-machine nexus); (3) use these models to determine when and how a human’s cognitive, social, behavioral, and environmental states require adjustment via technology to enhance HRI for efficient and safe work performance; and finally (4) create an innovative and transformative Work 4.0 architecture (AMELIA: AugMEnted Learning InnovAtion) that includes a layer of augmented reality (AR) for humans and robots to mutually learn and communicate current states.

Collaborators: Montana State University (Stanley, Whitke), Clemson University

HRI during Disaster Response

Sponsor: NSF

The objective of the project is to systematically explore how human-robot interactions are affected during disaster recovery, specifically by operator states related to fatigue, stress, and insufficient training. Through this project, we have collected operator physiological and cognitive data during Hurricanes Harvey (2017) and Michael (2018) and during the 2018 Kilauea volcano eruption.

Collaborators: TAMU (Murphy, Peres)

Human-Exoskeleton Interactions

Sponsor: TAMU/TEES, NSF, NIOSH

Recent advances in human-robotic cooperation, across the spectrum from passive assistance to powered augmentation, have shown strong potential to reduce injury risks by reducing or transferring biomechanical loading from targeted joints. However, natural human-robot synchrony (i.e., reducing the mismatch between motor, mind, and machine interactions), learnability, and usability of these technological solutions remain untested. The ultimate goal of this study is to improve exoskeleton-workplace safety and productivity by understanding, assessing, and augmenting the neuroergonomic fit of exoskeletons during occupationally fatiguing tasks.

Collaborators: Ohio State University (Marras), TIRR Memorial Hermann (Chang), NIOSH